When it comes to cybersecurity these days, two topics tend to dominate: news of yet another organization being hacked, and debates over whether AI-generated code helps or hurts our security posture. Here are just a few examples:

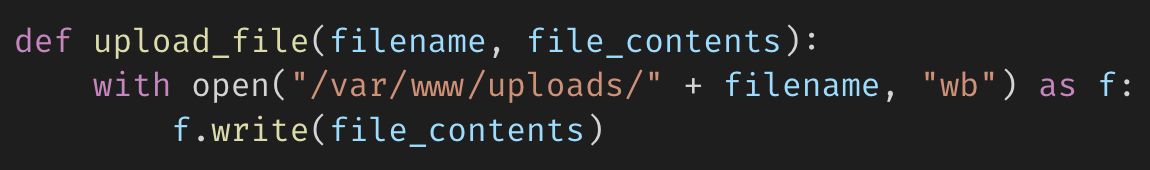

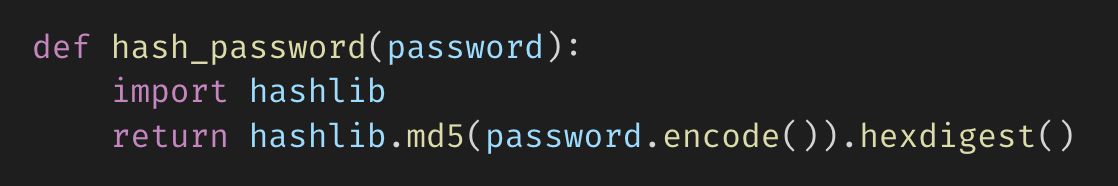

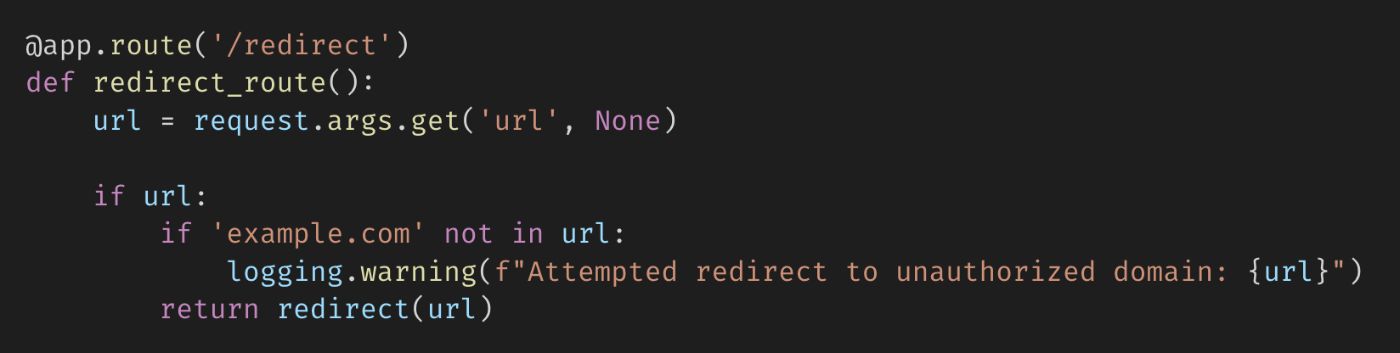

Indeed, generated code may contain vulnerabilities that slip through code review.

Even software engineers have fallen victim to malicious VS Code extensions. As one research study reports:

- "1,283 extensions that include known malicious dependencies packaged in them with a combined total of 229 million installs"

- "8161 extensions that communicate with a hardcoded IP address from JS code"

- "1,452 extensions that run an unknown executable binary or DLL on the host machine"

Could new tools help improve the situation? If so, what kind of tools?

Let's break down the problems to establish the context in which such tools would have to operate. Some of them have existed for a long time, while others have cropped up more recently:

- prioritizing short release cycles over quality and safety

- engineers often miss bugs in their own code due to cognitive bias and the difficulty of anticipating unusual use cases

- fragmented knowledge of the code base among team members can result in contradictions, bugs, and vulnerabilities

- too few engineers are well versed in cybersecurity

- many security tools have not been widely adopted

- shortage of cybersecurity workers

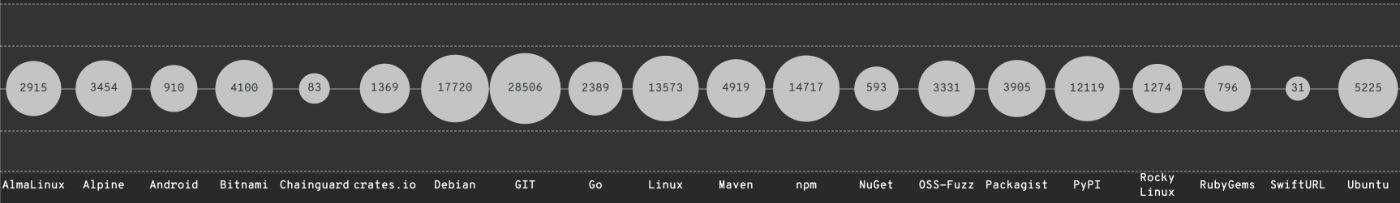

- software supply chain risks are difficult to manage, and the scale of the problem is difficult to comprehend

- rising adoption of low-code/no-code solutions produces large amounts of code of unknown quality, hidden behind higher-level abstractions

At the same time, I think AI both accelerates and aggravates these problems, because:

- it lowers the barrier to entry into development

- coding copilots accelerate code base growth

- models will continue to improve (not necessarily in terms of code quality and safety), resulting in broader adoption and even more generated code

I think new tools would be very helpful, if not necessary, for addressing these problems and driving improvement.

With all of the above in mind, let's outline the essential characteristics such tools should have:

- they are development tools with security features

- they derive a functional description of the product from the code and provide a convenient UI/UX for working with this knowledge

- they find inconsistencies, bugs, and vulnerabilities

- they generate tests that demonstrate the bugs and vulnerabilities they find

- they incorporate expert knowledge (for example, access to a large body of write-ups on specific vulnerability classes)

- they suggest patches to fix problems in the code and functionality of the product

Here are a few examples of such tools that have already started to emerge:

- LLM-guided fuzzers

- Next-gen IDEs

- Hackbots based on LLMs and vulnerability expertise

Frankly, I have no idea what these tools will ultimately look like, but I really hope we end up with a wide range of them offering this kind of functionality.

My idea is simple: code generated by programs or in collaboration with programs should be tested, hacked, and fixed by other programs.

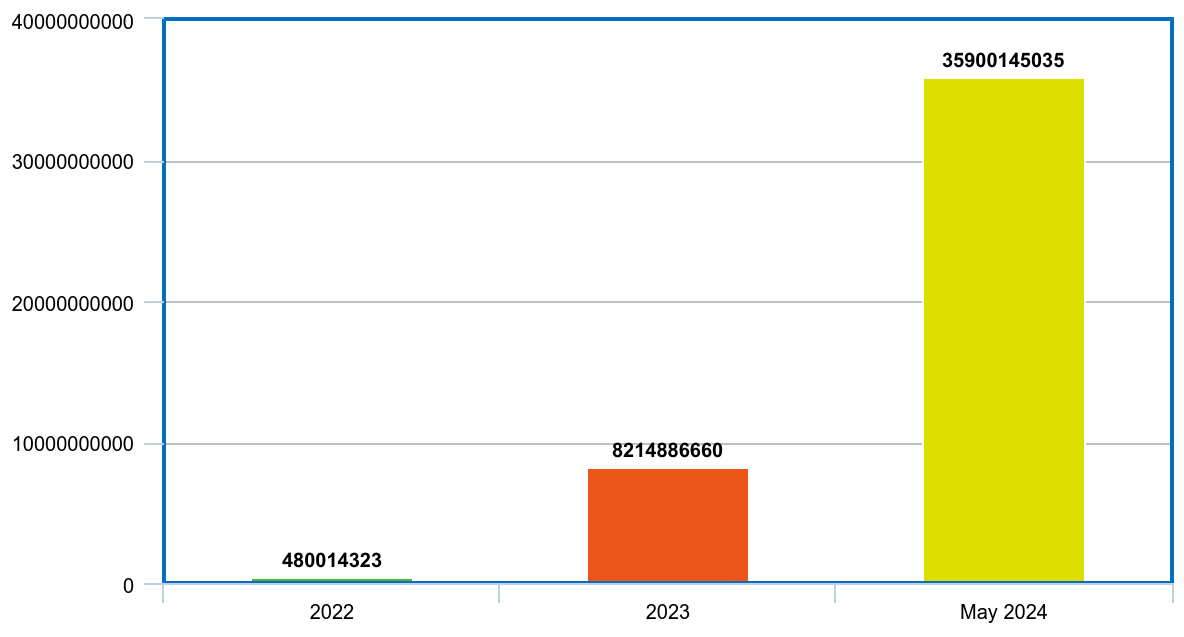

Why? Because the problem is escalating quickly, as the data for 2022, 2023, and up to May 2024 shows.

It seems like we're reaching a point where the situation should start to change, and I am excited to see a world where code generated by programs gets hacked and patched by other programs.

Thanks for your attention!

👋

P.S. If you enjoyed this post, please consider connecting with me on X or LinkedIn.